You Should be More Skeptical When Reading Research

With an Example of a Widely Reported but Very Misleading Study

Why You Should Be Skeptical

When evaluating research, you should be skeptical. Peer review¹ and publication in a good journal do not mean you can trust the findings as presented. A study could be technically accurate but misleading, or just plain wrong. And this is not just a possibility; it's more likely than not.

You may have heard of the replication crisis in science, but you may not be aware of the magnitude of the problem.

Many trace the crisis back to 2005, when John Ioannidis published "Why Most Published Research Findings Are False." Ioannidis argued that, because of small studies, small effects, flexible study designs and analyses, and reliance on p-value thresholds, the majority of claimed findings are likely false and won't replicate.

That's right: the majority of published research findings may be false. A big part of the reason is that statistics like p-values are easy to manipulate. Researchers who are, consciously or unconsciously, looking for a pattern that supports their hypothesis can easily massage the data until it shows the result they want.
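Ioannidis's argument rests on a simple calculation: the share of "significant" findings that are actually true depends not only on the significance threshold α, but also on statistical power (1 − β) and on the pre-study odds R that a tested relationship is real. Ignoring his bias term, the paper gives the positive predictive value as PPV = (1 − β)R / (R − βR + α). Below is a minimal sketch of that formula; the inputs (R = 0.1 and the power values) are illustrative assumptions, not numbers from any particular field.

```python
def positive_predictive_value(prior_odds: float, power: float, alpha: float = 0.05) -> float:
    """Share of statistically significant findings that reflect a real effect.

    Ioannidis's (2005) formula without his bias term:
        PPV = (1 - beta) * R / (R - beta * R + alpha)
    where power = 1 - beta and R = prior_odds, the pre-study odds
    that a tested relationship is real.
    """
    # For every 1 null relationship tested, R real ones are tested.
    true_positives = power * prior_odds   # real effects that reach significance
    false_positives = alpha               # null effects that reach significance by chance
    # R - beta*R + alpha simplifies to true_positives + false_positives.
    return true_positives / (true_positives + false_positives)


# Illustrative assumption: 1 real effect for every 10 null ones tested (R = 0.1).
for power in (0.8, 0.5, 0.2):
    ppv = positive_predictive_value(prior_odds=0.1, power=power)
    print(f"power {power:.0%}: {ppv:.0%} of significant findings are real")
```

Under these made-up inputs, about 62% of significant findings are real at 80% power, 50% at 50% power, and only about 29% at 20% power. Bias and flexible analyses, which this sketch leaves out, push the numbers lower still.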

P-Values

P-values work like this. Say you run a study and find an 8% improvement with a new drug over placebo. The p-value tells you how surprising that result would be if the drug actually did nothing. A p-value of 0.05 means that if the drug in fact had no effect and we ran this study 100 times, we would expect to see an improvement at least this large in about 5 of those runs purely by chance, even though there was no real difference.
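The "5 in 100" idea is easy to check by simulation: run many trials of a drug that truly does nothing and count how often the result still clears p < 0.05. This is a minimal sketch that assumes normally distributed outcomes with a known standard deviation and uses a simple two-sided z-test; real trials use other tests, but the logic is the same.

```python
import random
from statistics import NormalDist, fmean


def null_study_p_value(rng: random.Random, n_per_arm: int = 50) -> float:
    """One simulated trial in which the drug truly does nothing.

    Both arms draw outcomes from the same normal distribution (mean 0, sd 1),
    and the difference in means gets a two-sided z-test that treats sd = 1 as known.
    """
    drug = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
    placebo = [rng.gauss(0.0, 1.0) for _ in range(n_per_arm)]
    std_error = (2.0 / n_per_arm) ** 0.5          # sd of a difference of two means
    z = (fmean(drug) - fmean(placebo)) / std_error
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


def false_positive_rate(n_studies: int = 10_000, alpha: float = 0.05, seed: int = 1) -> float:
    """Fraction of no-effect studies that still come out 'significant'."""
    rng = random.Random(seed)
    hits = sum(null_study_p_value(rng) < alpha for _ in range(n_studies))
    return hits / n_studies


print(f"{false_positive_rate():.3f}")  # should land near 0.050
```

Every one of those "significant" results is a false positive, since the simulated drug never works.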

Based on that alone, you might think only about 5% of research would be false, but that's the idealized version of p-values. In real life, they can be as simple to game as testing 100 things and reporting only the 5 that came out statistically significant, testing the same thing in multiple ways, or massaging the data into arbitrary groups.
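The arithmetic behind "test 100 things, report the 5 that worked" is short. If each test of a true-null hypothesis has a 5% chance of a false positive, the chance that at least one of k independent tests comes out significant is 1 − 0.95^k. The sketch below assumes independent tests; tests of the same idea (like several similar scoring systems) are correlated, so the inflation is smaller, but it doesn't go away.

```python
ALPHA = 0.05  # conventional significance threshold


def chance_of_false_positive(num_tests: int, alpha: float = ALPHA) -> float:
    """Probability that at least one of `num_tests` independent tests of
    true-null hypotheses comes out 'significant' at level alpha."""
    return 1.0 - (1.0 - alpha) ** num_tests


for k in (1, 3, 20, 100):
    expected_hits = k * ALPHA   # average number of false 'discoveries'
    print(f"{k:>3} tests: P(at least one) = {chance_of_false_positive(k):.1%}, "
          f"expected false positives = {expected_hits:g}")
```

With 20 tests of nothing, the chance of at least one "discovery" is already about 64%, and with 100 tests you should expect about 5.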

As always, there is a relevant XKCD comic that illustrates this issue.

A Real World Example

Real studies aren't as easy to see through as the XKCD comic's different-colored jelly beans. Let's look at an example study.

This write-up actually started when a recently published study was sent to my family group chat. The study was reported under the headline "These foods raise your risk of dementia — a lot":

Butter. Hamburgers. Sausages. Store-bought pastries, cakes and cookies. Oh, and soda—sugary or diet. They're all bad and they all raise our risk of getting dementia—by a lot. So reports one of the biggest and scariest studies ever

The study got a fair amount of media coverage; maybe you saw it on one of the morning TV shows.

The principal authors both have doctorates in the field, and the journal it was published in is ranked among the top 10 general medical journals.

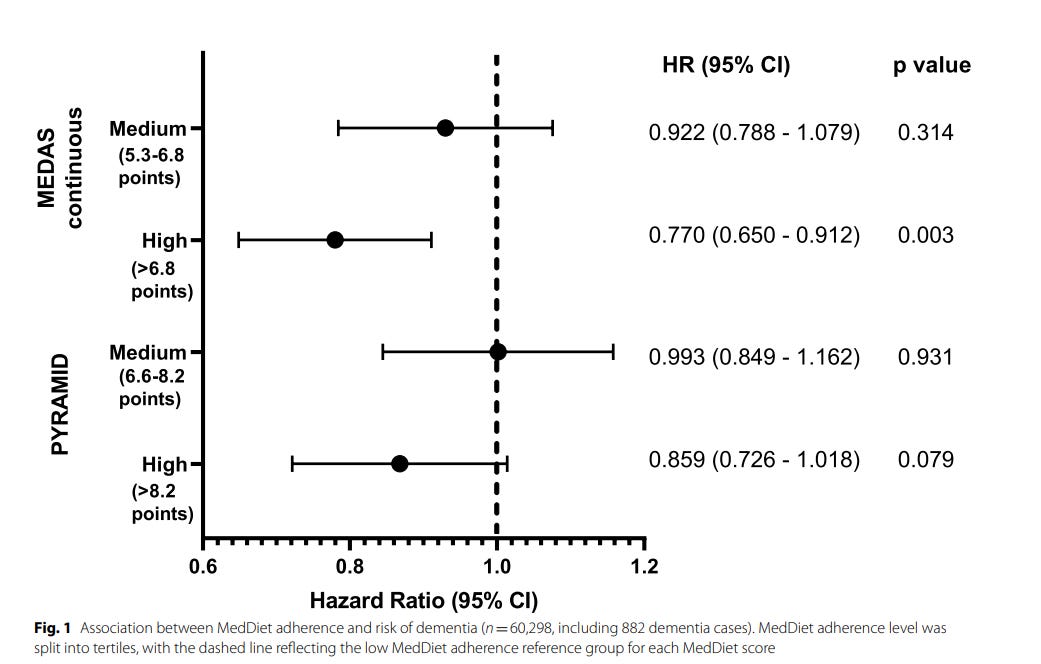

The key finding is summarized on the first page of the study: high adherence to a Mediterranean diet (MEDAS continuous, high) is associated with a 23% reduction in the risk of dementia.

More contextualized reporting might highlight that the overall incidence of dementia was low in every group: the study reports that high adherence to a Mediterranean diet takes your risk from 1.7% to 1.2%. Still, you can see why a journalist would be comfortable reporting the findings as presented in the paper's summary, and why the audience would come away thinking a Mediterranean diet means a significantly lower chance of dementia.
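The gap between relative and absolute framing is easy to quantify. The sketch below uses the crude 1.7% and 1.2% figures above; note that the relative reduction it computes from these crude percentages is not the same number as the study's adjusted 23%, which comes from a hazard ratio. The "number needed to treat" is only meaningful if the association were causal, which this observational study cannot show.

```python
def risk_summary(baseline_risk: float, treated_risk: float) -> dict[str, float]:
    """Compare two risks in absolute and relative terms."""
    absolute_reduction = baseline_risk - treated_risk
    return {
        "absolute_reduction": absolute_reduction,                # in probability units
        "relative_reduction": absolute_reduction / baseline_risk,
        "number_needed_to_treat": 1.0 / absolute_reduction,      # people per case avoided
    }


s = risk_summary(baseline_risk=0.017, treated_risk=0.012)
print(f"absolute: {s['absolute_reduction']:.1%}, "
      f"relative: {s['relative_reduction']:.0%}, "
      f"NNT: {s['number_needed_to_treat']:.0f}")
# absolute: 0.5%, relative: 29%, NNT: 200
```

A headline-friendly relative reduction of roughly a quarter to a third corresponds to half a percentage point in absolute terms, or about 200 people changing their diet for each case of dementia avoided, even under the most favorable reading.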

The Data from the Study

But let's take a closer look at the data reported in the study, and at what wasn't reported. Below is the main graph from the paper.

In the study, they measured how closely participants followed a Mediterranean-style diet, based on self-reported data, and scored it using three different scoring systems: MEDAS, MEDAS continuous, and PYRAMID. These are all different ways of trying to measure the same thing.

Error Bars and Confidence Intervals

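See the horizontal lines coming out of the dots? Those are the error bars, and they show the confidence interval around each result. The label "95% CI" means the interval was built by a procedure that, if the study were repeated many times, would capture the true value about 95% of the time. Any single interval either contains the true value or it doesn't. For a hazard ratio, the key question is whether the interval includes 1: if it does, the data are consistent with no difference at all.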
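The meaning of "95%" can be checked by simulation: draw many samples from a population whose true mean we know, build a 95% interval from each, and count how often the interval captures the truth. This is a sketch under simplifying assumptions (normally distributed data, known standard deviation, a plain mean rather than a hazard ratio); the study's intervals come from a fitted survival model, but the coverage idea is the same.

```python
import random
from statistics import NormalDist, fmean


def ci_coverage(true_mean: float = 2.0, sd: float = 1.0, n: int = 30,
                trials: int = 10_000, level: float = 0.95, seed: int = 7) -> float:
    """Fraction of simulated confidence intervals that contain the true mean."""
    rng = random.Random(seed)
    z = NormalDist().inv_cdf(0.5 + level / 2)   # about 1.96 for a 95% interval
    half_width = z * sd / n ** 0.5              # known-sd interval for a mean
    covered = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, sd) for _ in range(n)]
        sample_mean = fmean(sample)
        if sample_mean - half_width <= true_mean <= sample_mean + half_width:
            covered += 1
    return covered / trials


print(f"{ci_coverage():.3f}")  # should land near 0.950
```

The 95% describes the long-run behavior of the procedure, which is why a single study's interval should be read as a range of plausible values rather than a guarantee.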

Just looking at the graph, the data are consistent with the opposite being true. If PYRAMID is the better scoring system, perhaps a moderate amount of butter and sausages is significantly protective against dementia while stricter adherence is detrimental.

Here's another, less obvious point. The hazard ratios on the graph are measured against the "low MedDiet adherence reference group," shown by the dashed line. But they don't give us a confidence interval for that group. If we assume its interval is similar in size to that of the medium adherence group, it would look something like this.

That makes it much clearer that all the different diet groups could really be equal.

Multiple Scoring Systems

Another issue is that they tested the same thing, how well you followed the Mediterranean diet, using three different scoring systems: MEDAS, MEDAS continuous, and PYRAMID. These are all very similar scoring systems trying to measure the same thing. Yet only one of them suggested that high adherence is protective at the 95% confidence level. The scoring system that found medium adherence to be worse than low adherence was left off the main graph, though they did show the full data set later in the paper.

Why is the data split into three groups, though? Why not 10? Why not one graph showing the improvement the more closely you follow the diet? MEDAS is a 0–14-point scoring system, which they've broken into low (0–5.3), medium (>5.3–6.8), and high (>6.8) adherence.
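The paper's own wording ("when split into tertiles") suggests the cut points are sample tertiles: values chosen from the data so that each group holds about a third of participants. That makes them a property of this particular cohort rather than of the diet. A minimal sketch of how tertile groups are formed, using Python's `statistics.quantiles` and hypothetical scores (not the study's data), with the same low (≤ first cut), medium (> first cut to second cut), high (> second cut) convention:

```python
from statistics import quantiles


def tertile_groups(scores: list[float]) -> list[str]:
    """Label each score low/medium/high using tertile cut points computed from the data."""
    low_cut, high_cut = quantiles(scores, n=3)   # two cut points splitting the data into thirds
    labels = []
    for s in scores:
        if s <= low_cut:
            labels.append("low")
        elif s <= high_cut:
            labels.append("medium")
        else:
            labels.append("high")
    return labels


hypothetical_scores = [1, 2, 3, 4, 5, 6, 7, 8, 9]
print(tertile_groups(hypothetical_scores))
# ['low', 'low', 'low', 'medium', 'medium', 'medium', 'high', 'high', 'high']
```

A different cohort would produce different cut points, and everyone within a group is treated as identical, which throws away much of the information in the original 0–14 scale.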

In fact, although they don't offer a graphic, they do report a calculation that doesn't break the population into three groups, though it shows a less dramatic effect:

…each one-point increase in MEDAS score was associated with 4.5% lower risk of dementia (HR: 0.955; 95% CI: 0.918–0.993; p=0.021)
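A hazard ratio, its confidence interval, and its p-value should tell the same story, and you can roughly back out one from the others. The sketch below assumes the interval was built symmetrically on the log scale (log HR ± 1.96 × SE), the usual convention for ratio estimates; it only approximates the paper's own calculation, but it's a handy sanity check.

```python
import math
from statistics import NormalDist


def p_from_hazard_ratio(hr: float, lower: float, upper: float, level: float = 0.95) -> float:
    """Approximate two-sided p-value implied by a hazard ratio and its confidence interval.

    Assumes the interval is log(HR) +/- z * SE on the log scale.
    """
    z_crit = NormalDist().inv_cdf(0.5 + level / 2)
    se_log_hr = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(hr) / se_log_hr
    return 2 * (1 - NormalDist().cdf(abs(z)))


def crosses_one(lower: float, upper: float) -> bool:
    """True if the interval includes HR = 1, i.e. 'no difference' is not ruled out."""
    return lower <= 1.0 <= upper


# Figures quoted from the paper: per-point MEDAS, then moderate vs. low adherence
print(round(p_from_hazard_ratio(0.955, 0.918, 0.993), 3), crosses_one(0.918, 0.993))
print(round(p_from_hazard_ratio(1.023, 0.873, 1.199), 3), crosses_one(0.873, 1.199))
```

For the per-point estimate this gives p ≈ 0.02, in line with the reported 0.021, and an interval that just excludes 1; for moderate adherence it gives p ≈ 0.78, close to the reported 0.775, with an interval that comfortably includes 1.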

Breaking the data into three groups also produces some odd results: as we saw, some scoring systems showed that following the diet at a medium level was worse than following it at a low level.

...when split into tertiles, high (HR: 0.783, 95% CI: 0.651–0.943, p=0.001) but not moderate (HR: 1.023, 95% CI: 0.873–1.199, p=0.775) MedDiet adherence was associated with lower dementia risk versus low MedDiet adherence

We'll come back to the validity of splitting the data into three groups, but let's first consider confounding variables.

Confounding Variables

Keep in mind that this data only shows an association between dementia and this diet. This is not a randomized controlled trial in which one group was fed sausages. The researchers asked a lot of people what they ate and, based on this self-reported data, saw that people who ate these foods were possibly (we can't say for sure, given the wide error bars) more likely to develop dementia. That could simply be because exercise is strongly protective and people who exercise eat fewer sausages.
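The exercise scenario can be made concrete with a small simulation. This is an entirely hypothetical cohort with made-up probabilities, none of them from the study: exercise lowers dementia risk and also makes people less likely to eat sausages, while sausages have no effect at all. Comparing sausage eaters with everyone else still shows a higher risk; comparing them only within the same exercise group (stratifying, the simplest way to "control for" a confounder) makes the effect vanish.

```python
import random


def simulate_confounding(n: int = 300_000, seed: int = 42) -> dict[str, float]:
    """Risk ratios (sausage eaters vs. non-eaters) in a made-up cohort where
    sausages have NO effect on dementia but exercise affects both."""
    rng = random.Random(seed)
    # counts[(exercises, eats_sausage)] = [people, dementia_cases]
    counts = {(e, s): [0, 0] for e in (True, False) for s in (True, False)}
    for _ in range(n):
        exercises = rng.random() < 0.5
        eats_sausage = rng.random() < (0.2 if exercises else 0.6)
        dementia = rng.random() < (0.01 if exercises else 0.03)  # depends on exercise only
        cell = counts[(exercises, eats_sausage)]
        cell[0] += 1
        cell[1] += dementia

    def risk(cells: list[list[int]]) -> float:
        return sum(c[1] for c in cells) / sum(c[0] for c in cells)

    crude = (risk([counts[(True, True)], counts[(False, True)]])
             / risk([counts[(True, False)], counts[(False, False)]]))
    # Stratifying by exercise compares like with like.
    exercisers = risk([counts[(True, True)]]) / risk([counts[(True, False)]])
    non_exercisers = risk([counts[(False, True)]]) / risk([counts[(False, False)]])
    return {"crude": crude, "exercisers": exercisers, "non_exercisers": non_exercisers}


print(simulate_confounding())
```

With these inputs the crude risk ratio comes out around 1.5, while both within-group ratios sit near 1. Regression adjustment does the same job as stratification when there are several confounders to handle at once.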

Dig into the study a bit and you see the authors do note some possible confounding variables.

Participants with a higher MedDiet adherence according to the MEDAS continuous score were more likely to be female, have a BMI within the healthy range (<25 kg/m²), have a higher educational level, and be more physically active than those with lower MedDiet adherence.

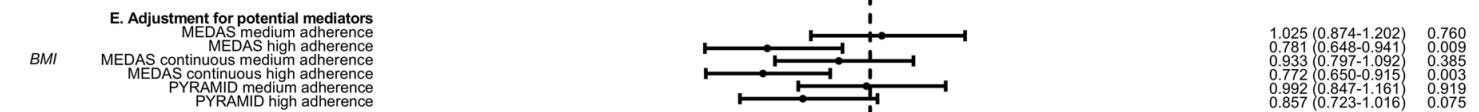

And while they note these potential confounders, they don't run a regression analysis to control for them. The study does offer adjustments for BMI and education level (which on their own widen the confidence intervals considerably), but there is no regression that controls for all of these variables together.

Putting it All Together

Keeping in mind everything we've seen about the different scoring systems, confidence intervals, and confounding variables, we can now put it all together.

Buried in the supplemental data, you will find what is actually the most important result of the study.

Suppose you impute missing data, increasing the sample from 60,298 people with 882 dementia cases to 196,335 people with 5,001 dementia cases; you don't arbitrarily break the groups into low/medium/high; and you adjust for people's pre-existing genetic risk of dementia. What does the data say then? Adherence to a Mediterranean diet is associated with a very slight increase in dementia risk under all three scoring systems, and here the similar scoring systems finally agree: hazard ratios of 1.008 for MEDAS, 1.009 for MEDAS continuous, and 1.008 for PYRAMID. Values that close to 1 essentially mean the risk barely changes as you adhere more closely to the diet.

Summary

A better title would have been "Association study finds eating healthy might slightly increase your risk of dementia. More likely, though, it makes no difference."

This was part 1 of a multi-part series where we’ll learn about how to interpret research by going through real studies. The next post will focus on publication bias and how to detect and adjust for it with funnel plots.

¹ A very interesting read on the topic: "The rise and fall of peer review" by Adam Mastroianni (substack.com)
